It’s time to say goodbye to 'functionally equivalent artificial neurons'

By Alfredo Parra-Hinojosa

April 26, 2025

The idea that we can substitute carbon-based neurons with functionally equivalent artificial neurons (made of, say, silicon) is at the heart of many discussions about consciousness. For me, this used to be a load-bearing argument for caring about digital consciousness, and I think it still is for many people, including at Anthropic. However, it doesn’t withstand closer scrutiny, so I think it’s time to let go of this dearly beloved intuition.

This post was inspired by the video “Consciousness Isn't Substrate-Neutral: From Dancing Qualia & Epiphenomena to Topology & Accelerators” by Andrés Gómez-Emilsson, but it’s written in my personal capacity.

Cartoon neurons

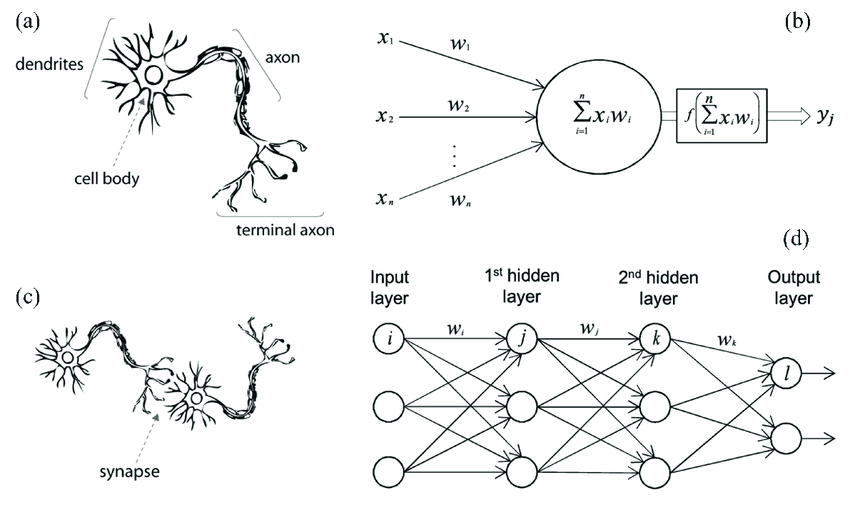

Anesthesiologist Stuart Hameroff likes to speak of “cartoon neurons”—the overly simplified abstraction of neurons as switches that take in some inputs and fire (or not) depending on some activation function.

He calls this abstraction “an insult to neurons”:

Why are they an insult? Well, if you think, they're cells. Neurons are cells, right? And a single cell paramecium can swim, learn, avoid predators, find food, mates and have sex.

I think intra-neuron sex has not yet been documented, but the point still stands. As I wrote in a previous post:

Single-cell organisms are thought to be capable of learning, integrating spatial information and adapting their behavior accordingly, deciding whether to approach or escape external signals, storing and processing information, and exhibiting predator-prey behaviors, among others. Attempts to use artificial neural networks have been shown to be “inefficient at modelling the single-celled ciliate’s avoidance reactions.” (Trinh, Wayland, Prabakaran, 2019). “Given these facts, simple ‘summation-and-threshold’ models of neurons that treat cells as mere carriers of adjustable synapses vastly underestimate the processing power of single neurons, and thus of the brain itself.” (Tecumseh Fitch, 2021)

Other processes happening at the neuronal level include dendritic computation, cytoskeletal dynamics, ephaptic coupling, oscillatory behaviors, possible quantum effects, ultraweak photon emissions, and many more.[1]
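To make the cartoon model concrete, here is a minimal Python sketch of a “summation-and-threshold” neuron, assuming the simplest textbook form. The names `cartoon_neuron`, `step`, and `sigmoid` are hypothetical and not taken from any library. The entire cell is reduced to one weight per input, a bias, and an activation function; none of the processes just listed has anywhere to live in this model.

```python
import math
from typing import Callable, Sequence


def step(z: float) -> float:
    """Fire (1.0) if the summed input is positive, otherwise stay silent (0.0)."""
    return 1.0 if z > 0 else 0.0


def sigmoid(z: float) -> float:
    """Smooth alternative activation used in many artificial neural networks."""
    return 1.0 / (1.0 + math.exp(-z))


def cartoon_neuron(inputs: Sequence[float], weights: Sequence[float],
                   bias: float = 0.0,
                   activation: Callable[[float], float] = step) -> float:
    """Hypothetical 'cartoon' neuron: weighted sum of inputs, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # The whole cell is collapsed into this single number: a weighted sum.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The activation decides whether (or how strongly) the neuron "fires".
    return activation(z)


if __name__ == "__main__":
    print(cartoon_neuron([1.0, 0.5], [0.8, -0.4]))  # 0.8 - 0.2 > 0, prints 1.0
    print(cartoon_neuron([0.0, 1.0], [0.8, -0.4]))  # -0.4 <= 0, prints 0.0
```

Swapping the step function for a sigmoid changes the shape of the output but not the abstraction itself: the neuron is still a weighted sum passed through a fixed function.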

But does it matter that neurons are actually more complex than the cartoon model suggests? For instance, one could argue that:

1. All the additional complexity is not relevant, so it’s fine to ignore it; or

2. With sufficiently advanced technology, time, and dedication, we should be able to make artificial neurons (or simulations thereof) with an arbitrary level of detail.

I don’t think either of these arguments holds up.

Abstracting away complexity

Regarding (1):

Given the neuronal processes listed above, the burden of proof should be on whoever argues that all of them can be ignored. For example, ephaptic coupling shows us that the electric field in the brain plays a causal role, and this type of interaction is not modeled in artificial neural networks.

One could respond that such field interactions could, in principle, be modeled within existing neural network frameworks (e.g., through careful parameterization or new connection topologies). However, even if it were possible to reproduce the input/output behavior correctly (which I doubt, for reasons outlined later), also getting the runtime complexity right adds an entirely new dimension of computational requirements that, I believe, make “functional equivalence” impossible. I made this point in a previous post, but the basic idea is that the physical substrate used for computation (e.g., the electromagnetic field) determines how quickly and efficiently computation happens, in some cases allowing for near-instantaneous information processing, which matters for the web of causality. Imagine a self-driving car that correctly recognizes pedestrians but takes seconds instead of milliseconds to do so. This, I believe, goes all the way down.

The presence of electromagnetic phenomena in the brain (which can allow for near-instantaneous information processing) should, at the very least, make you very skeptical of the notion of functionally equivalent artificial neurons. But does it get more complicated? For example, are quantum effects also present in the brain?

In a sense, obviously yes:

I don’t think it’s even necessary to debate whether quantum phenomena manifest somehow at the macro level of the brain (whatever that even means), as suggested by Penrose but disputed by Tegmark. Quantum mechanics underlies all brain phenomena, so it necessarily partakes in the causal chain, and ignoring this when discussing functional equivalence is question-begging. Besides, the debate over whether quantum phenomena manifest at the macro level is far from settled.[2] In fact, one could even argue that we already have beautiful proof of such macro-level effects from the field of anesthetics: xenon isotopes with nonzero nuclear spin are much less effective anesthetics than isotopes without nuclear spin. And spin is, of course, a quintessential quantum property.[3][4]

A strong intuition behind wanting to ignore the added complexity is that neural networks (based on the cartoon model) are universal function approximators, and they seem to be working exceedingly well in machine learning. But just because a neural network is a universal function approximator doesn’t automatically mean that it is an appropriate substrate for consciousness. These are completely different problems. A priori, there should be no reason to abstract away all the complexity (and, in fact, I suspect some of the things LLMs struggle with, such as visual reasoning, are precisely the result of ignoring that extra complexity).[5]

Simulating the brain

Chalmers’ “dancing qualia” thought experiment (which relies on the notion of functionally equivalent artificial neurons) is often used to argue that consciousness is substrate-independent, which in turn implies that sufficiently detailed brain simulations could be conscious. Many people working on AI consciousness find this view compelling. But what counts as “sufficiently detailed”? What’s the appropriate level of abstraction at which we can ignore additional, underlying complexity?

Consider the following fluid dynamics simulation:

These sorts of fluid simulations can be extremely compute-intensive but, if done properly, can model the underlying physical phenomena (flows, turbulence, etc.) really well. And, importantly, it’s totally fine to abstract away a lot of complexities! For example, many fluid models don’t require simulating the individual atoms, let alone the subatomic particles within those atoms. Can’t we do something similar with the brain?

Well, yes and no. Such a simulation, while useful, will have (by design) a narrow domain of validity, dictated by the underlying mathematical model and the granularity of the simulation. Introduce additional effects or constraints and the simulation stops being adequate. For instance, the simulation above won’t tell you what happens to the fluid at super high temperatures, or when it interacts with certain chemicals, or when you shoot a beam of electrons through it. You won’t get the full picture unless you simulate the system at the deepest level.

Sufficiently detailed replicas/simulations

Regarding (2) (i.e., that we should be able to make artificial neurons or simulations thereof with an arbitrary level of detail with sufficiently advanced technology, time, and dedication):

One could argue that, within a simulation, all that matters is the relative timing of events rather than absolute speed. So even if certain physical substrates can accelerate computation, we could just take our time until we get the relative timing of all the events right. However, past a certain level of detail, given that some fields of physics propagate at the speed of light and interact with each other in such intricate ways, you’ll eventually reach a point where you’d have to wait longer than the age of the universe to simulate even certain simple systems. If your argument requires waiting longer than the age of the universe, I think you should reconsider your position.

The very final attempt to save this line of thinking is to claim that reality is already binary at the deepest level, and so maybe one day we’ll find a way to use that deepest substrate for computation. Andrés has already addressed this point eloquently, so I’ll just paraphrase.

Here is how the argument usually goes: “OK, sure, assume that you need quantum mechanics or topological fields to explain the behavior of a neural network in the brain. Why can't we just simulate that? Fundamentally, reality is already binary, made of tiny Planck-length ones and zeros in this massive network of causality, and that is deep reality. And so if that deep reality is capable of simulating our brains at an emergent level, and that creates our consciousness at an emergent level, then clearly some kind of fundamental non-binary unity at the lowest levels of reality is not necessary, right?” This is question-begging. It is something you're assuming, and it is open to interpretation. Actually, physics (the facts) does not imply that. Quite the contrary. For example, string theory postulates that the building blocks of reality are topological in nature, meaning that topological computing could be at the very base layer of reality.[6] And in that sense, no, reality would not be made of points interacting with each other at the Planck length.

Takeaways

In summary: the physical substrate used for computation matters for the speed of computation, and the brain’s substrate is no exception. Arguments that brush away this fact end up being question-begging. If you want to reproduce the full causal behavior of, say, a laser beam, the only way to do so is by using an actual laser beam.[7]

The position I’m trying to defend here is often portrayed very uncharitably: “Some people believe consciousness requires biological neurons!” The pejorative term “carbon chauvinist” further cements this narrative.[8] But I think such use of language oversimplifies the complexity of our physical reality.[9]

Rejecting substrate independence strongly challenges consciousness claims regarding:

I hope the ideas in this post make you at least question your assumptions about the validity of computational functionalism as an approach to consciousness. If not, then perhaps the binding/boundary/integrated information problem or the slicing problem will. To me, the evidence and arguments against computational functionalism are just overwhelming.

So what’s the alternative? I currently think it involves endorsing the notion that the fundamental fields of physics are fields of qualia. While this is an unintuitive idea with other unintuitive implications, such an ontology is, I think, much better suited to tackle the problems that a successful theory of consciousness should address.[10]

Footnotes

1. Claude adds: Synaptic plasticity, retrograde signaling, gene expression regulation, protein synthesis at synapses, astrocyte interactions, extracellular matrix interactions, molecular memory, metabolic signaling, and intrinsic excitability changes.

2. I personally give some credence to the idea that quantum entanglement may be the underlying mechanism behind phenomenal binding, as argued by Atai Barkai.

3. See also the talk “Spintronics in Neuroscience” by Luca Turin at The Science of Consciousness conference 2023.

4. Even more trivially, neurotransmitters are just molecules, so their behavior is at least in part dictated by the laws of quantum mechanics.

5. For example, the brain might be using the electromagnetic field for nonlinear wave computing. LLMs might still be able to brute force visual reasoning to some extent as they become more advanced, but they still won’t be conscious.

6. String theory may turn out not to be correct, but the argument doesn’t depend on string theory in particular. There are other physics ontologies that challenge the “it from bit” assumption.

7. I was tempted to include a midwit meme here, with the left and right extremes showing the text “A simulation of a thing is not the thing itself,” but I don’t endorse this form of antagonism.

8. Personally, I much prefer “non-materialist physicalist”.

9. It’s kind of like saying “I don’t need a laser pointer! I can point at things just fine with this stick.” But maybe “ability to point at things” was never the point to begin with (and even if it was, using a laser would let you point at many more things and much faster).

10. Cf. Johnson’s Principia Qualia, p. 21.